Why DHTI Chains Matter: Moving Beyond Single LLM Calls in Healthcare AI (Part II)

Large Language Models (LLMs) are powerful, but a single LLM call is rarely enough for real healthcare applications. Out of the box, an LLM keeps no memory between calls, cannot use tools, and cannot reliably perform multi‑step reasoning, limitations highlighted in multiple analyses of LLM‑powered systems. In clinical settings, where accuracy, context, and structured outputs matter, a single prompt‑response cycle is not viable.

Healthcare workflows require the retrieval of patient data, contextual reasoning, validation, and often the structured transformation of model output. A single LLM call cannot orchestrate these steps. This is where chains become essential.

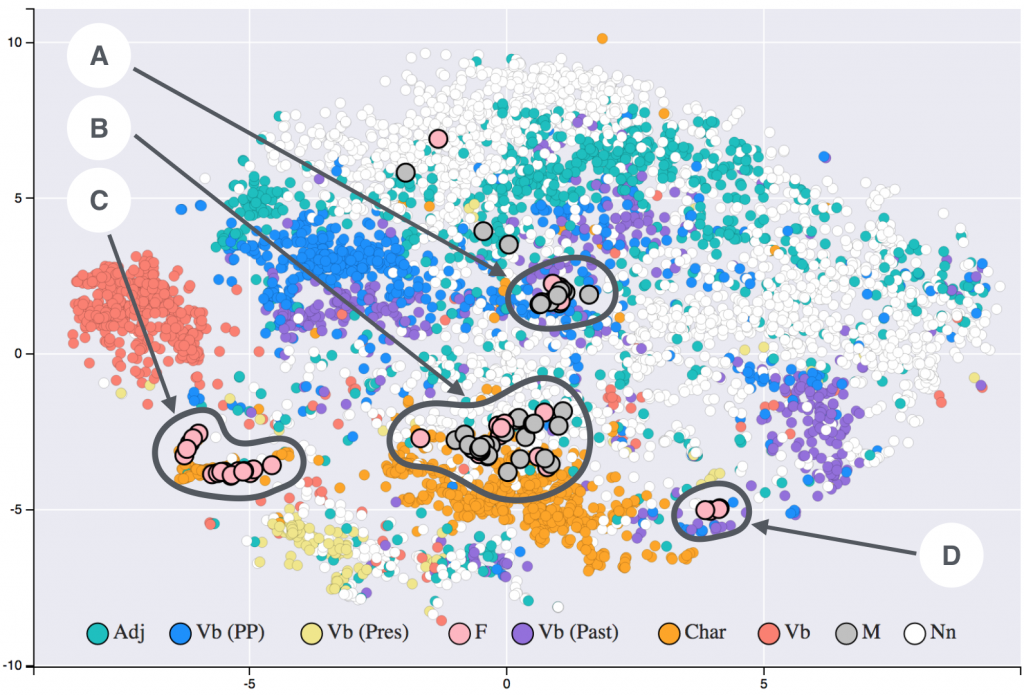

Image credit: FASING Group, CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0, via Wikimedia Commons

What Are Chains, and Why Do They Matter?

A chain is a structured workflow that connects multiple steps—LLM calls, data transformations, retrieval functions, or even other chains—into a coherent pipeline. LangChain describes chains as “assembly lines for LLM workflows,” enabling multi‑step reasoning and data processing that single calls cannot achieve.

Chains allow developers to:

- Break complex tasks into smaller, reliable steps

- Enforce structure and validation

- Integrate external tools (e.g., FHIR APIs, EMR systems)

- Maintain deterministic flow in safety‑critical environments

In healthcare, this is crucial. For example, generating a patient‑specific summary may require:

- retrieving data from an EMR,

- cleaning and structuring it,

- generating a clinical narrative, and

- validating the output.

A chain handles this entire pipeline.
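To make the idea concrete, here is a minimal plain-Python sketch of those four stages composed into one pipeline. It does not use LangChain's API; the `chain` helper and all four step functions are hypothetical stand-ins, and the patient record is made-up sample data. In a real system the retrieval step would query the EMR and the narrative step would call an LLM.

```python
from functools import reduce
from typing import Any, Callable

Step = Callable[[Any], Any]


def chain(*steps: Step) -> Step:
    """Compose steps left to right: each output becomes the next input."""
    def run(value: Any) -> Any:
        return reduce(lambda acc, step: step(acc), steps, value)
    return run


# Hypothetical stand-ins for the four stages described above.
def retrieve_from_emr(patient_id: str) -> dict:
    # Assumption: a real step would fetch this record from the EMR.
    return {
        "id": patient_id,
        "name": " Jane Doe ",
        "conditions": ["Hypertension", "", "Asthma"],
    }


def clean_record(record: dict) -> dict:
    # Trim whitespace, drop empty entries, and lowercase condition names.
    return {
        "id": record["id"],
        "name": record["name"].strip(),
        "conditions": [c.lower() for c in record["conditions"] if c],
    }


def generate_narrative(record: dict) -> str:
    # Assumption: a real step would send a prompt to an LLM here.
    return f"{record['name']} has a history of {', '.join(record['conditions'])}."


def validate_narrative(text: str) -> str:
    # Fail loudly instead of passing a malformed narrative downstream.
    if not text.strip() or not text.endswith("."):
        raise ValueError("narrative failed validation")
    return text


summarize_patient = chain(
    retrieve_from_emr, clean_record, generate_narrative, validate_narrative
)
summary = summarize_patient("patient-001")
print(summary)
```

Because each stage is an ordinary function, any one of them can be tested or replaced on its own without touching the others.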

Sequential, Parallel, and Branch Flows

Modern LLM applications often require more than linear processing. LangChain supports three major flow types:

✅ Sequential Chains

Sequential chains run steps in order, where the output of one step becomes the input to the next. They are ideal for multi‑stage reasoning or data transformation pipelines.

✅ Parallel Chains

Parallel chains run multiple tasks at the same time—useful when extracting multiple data elements or generating multiple outputs concurrently. LangChain’s RunnableParallel enables this pattern efficiently.

✅ Branching Chains

Branch flows allow conditional logic—different paths depending on model output or data state. This is essential for clinical decision support, where logic often depends on patient‑specific conditions.

Together, these patterns allow developers to build robust, production‑grade AI systems that go far beyond simple prompt engineering.

Implementing Chains in LangChain and Hosting Them on LangServe

LangChain provides a clean, modular API for building chains, including prompt templates, LLM wrappers, and runnable components. LangServe extends this by exposing chains as FastAPI‑powered endpoints, making deployment straightforward.

This combination—LangChain + LangServe—gives developers a scalable, observable, and maintainable way to deploy multi‑step GenAI workflows.

DHTI: A Real‑World Example of Chain‑Driven Healthcare AI

DHTI embraces these patterns to build GenAI applications that integrate seamlessly with EMRs. DHTI uses:

- Chains for multi‑step reasoning

- LangServe for hosting GenAI services

- FHIR for standards‑based data retrieval

- CDS‑Hooks for embedding AI output directly into EMR workflows

This standards‑based approach ensures interoperability and makes it easy to plug GenAI into clinical environments without proprietary lock‑in. DHTI makes sharing chains remarkably simple by packaging each chain as a modular, standards‑based service that can be deployed, reused, or swapped without touching the rest of the system. Because every chain is exposed through LangServe endpoints and integrated using FHIR and CDS‑Hooks conventions, teams can share, version, and plug these chains into different EMRs or projects with minimal friction.
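As an illustration of the CDS‑Hooks side, the sketch below wraps a chain's text output in a card. The field names (`summary`, `indicator`, `detail`, `source.label`), the three indicator values, and the summary length limit follow my reading of the CDS Hooks specification. The `to_cds_card` function and the sample strings are hypothetical, and the source does not show how DHTI itself builds its cards.

```python
import json

VALID_INDICATORS = {"info", "warning", "critical"}
MAX_SUMMARY_CHARS = 140  # the specification asks for a summary under 140 characters


def to_cds_card(summary: str, detail: str, indicator: str = "info",
                source_label: str = "Example GenAI chain") -> dict:
    """Wrap chain output in a CDS Hooks style card (hypothetical helper)."""
    if indicator not in VALID_INDICATORS:
        raise ValueError(f"unknown indicator: {indicator!r}")
    if len(summary) >= MAX_SUMMARY_CHARS:
        # Truncate so the summary stays under the limit.
        summary = summary[: MAX_SUMMARY_CHARS - 4] + "..."
    return {
        "summary": summary,
        "indicator": indicator,
        "detail": detail,
        "source": {"label": source_label},
    }


# A CDS service responds with a list of cards.
response = {
    "cards": [
        to_cds_card(
            summary="Possible interaction flagged for review",
            detail="Sample detail text produced by a chain.",
            indicator="warning",
        )
    ]
}
print(json.dumps(response, indent=2))
```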

Explore the project here:

Try DHTI and Help Democratize GenAI in Healthcare

DHTI is open‑source, modular, and built on widely adopted standards. Whether you’re a researcher, developer, or clinician, you can use it to prototype safe, interoperable GenAI workflows that work inside real EMRs.

More Examples of Chains

✅ 1. Clinical Note → Problem List → ICD-10 Coding

Why chaining helps

A single LLM call struggles because:

- The task is multi‑step: extract problems → normalize → map to ICD‑10.

- Each step benefits from structured intermediate outputs.

- Errors compound if the model tries to do everything at once.

Sequential Runnable Example

Step 1: Extract the structured problem list from the free‑text note

Step 2: Normalize problems to standard clinical terminology

Step 3: Map each normalized problem to ICD‑10 codes

This mirrors real clinical coding workflows and allows validation at each step.

Sequential chain sketch

- extract_problems(note_text)

- normalize_terms(problem_list)

- map_to_icd10(normalized_terms)
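The three steps above can be sketched in plain Python as follows. The lookup tables are toy stand-ins for the LLM calls and terminology services a real chain would use, and the note text is invented. The function names come from the sketch above, but their bodies are assumptions made for illustration.

```python
# Toy lookup tables (assumptions): a real chain would call an LLM or a
# terminology service at each step.
SYNONYMS = {
    "htn": "essential hypertension",
    "high blood pressure": "essential hypertension",
    "t2dm": "type 2 diabetes mellitus",
}
ICD10 = {
    "essential hypertension": "I10",
    "type 2 diabetes mellitus": "E11.9",
}


def extract_problems(note_text: str) -> list[str]:
    """Step 1: pull known problem mentions out of the free-text note."""
    text = note_text.lower()
    return [term for term in SYNONYMS if term in text]


def normalize_terms(problem_list: list[str]) -> list[str]:
    """Step 2: map each mention to a standard term, dropping duplicates."""
    return list(dict.fromkeys(SYNONYMS[p] for p in problem_list))


def map_to_icd10(normalized_terms: list[str]) -> dict[str, str]:
    """Step 3: look up a code per term, failing loudly on unmapped terms."""
    missing = [t for t in normalized_terms if t not in ICD10]
    if missing:
        raise ValueError(f"no ICD-10 mapping for: {missing}")
    return {t: ICD10[t] for t in normalized_terms}


note = "Pt with HTN and T2DM, here for follow-up."
problems = extract_problems(note)      # intermediate output, inspectable
terms = normalize_terms(problems)      # intermediate output, inspectable
codes = map_to_icd10(terms)
print(codes)
```

Keeping `problems` and `terms` as separate values is the point of the pattern: each intermediate result can be checked before the next step runs.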

✅ 2. Clinical Decision Support: Medication Recommendation With Safety Checks

Why chaining helps

A single LLM call might hallucinate or skip safety checks. A chain allows:

- Independent verification steps

- Parallel evaluation of risks

- Branching logic based on findings

Parallel Runnable Example

Given a patient with multiple comorbidities:

Parallel tasks:

- Evaluate renal dosing requirements

- Check drug–drug interactions

- Assess contraindications

- Summarize guideline‑based first‑line therapies

All four run simultaneously, and their results are then merged.

Parallel chain sketch

    {
        renal_check: check_renal_function(patient),
        ddi_check: check_drug_interactions(patient),
        contraindications: check_contraindications(patient),
        guideline: summarize_guidelines(condition)
    }
    → combine_and_recommend()

This mirrors how pharmacists and CDS systems work: multiple independent checks feeding into a final recommendation.
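A runnable approximation of this fan-out and merge, using only the standard library, might look like the following. It is a sketch under stated assumptions: the `run_parallel` helper is hypothetical, the patient record is invented, and the threshold and drug pair are placeholders rather than clinical rules. In LangChain, `RunnableParallel` plays the role that `run_parallel` plays here.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable


def check_renal_function(patient: dict) -> list[str]:
    # Placeholder threshold for illustration only.
    return ["low eGFR: review dosing"] if patient["egfr"] < 30 else []


def check_drug_interactions(patient: dict) -> list[str]:
    # Placeholder pair for illustration only.
    drugs = set(patient["medications"]) | {patient["candidate"]}
    return ["warfarin + aspirin: review"] if {"warfarin", "aspirin"} <= drugs else []


def check_contraindications(patient: dict) -> list[str]:
    hit = patient["candidate"] in patient["allergies"]
    return [f"allergy to {patient['candidate']}"] if hit else []


def summarize_guidelines(condition: str) -> str:
    # Assumption: a real step would retrieve and summarize guideline text.
    return f"(placeholder guideline summary for {condition})"


def run_parallel(tasks: dict[str, Callable[[], Any]]) -> dict[str, Any]:
    """Run every task concurrently and collect the results by name."""
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = {name: pool.submit(fn) for name, fn in tasks.items()}
        return {name: future.result() for name, future in futures.items()}


def combine_and_recommend(findings: dict[str, Any]) -> dict[str, Any]:
    # Merge the independent checks into one result for the final step.
    warnings = (findings["renal_check"] + findings["ddi_check"]
                + findings["contraindications"])
    return {
        "guideline": findings["guideline"],
        "warnings": warnings,
        "needs_review": bool(warnings),
    }


patient = {"egfr": 25, "medications": ["warfarin"],
           "allergies": ["penicillin"], "candidate": "aspirin"}
findings = run_parallel({
    "renal_check": lambda: check_renal_function(patient),
    "ddi_check": lambda: check_drug_interactions(patient),
    "contraindications": lambda: check_contraindications(patient),
    "guideline": lambda: summarize_guidelines("example condition"),
})
recommendation = combine_and_recommend(findings)
print(recommendation)
```

Threads suit this case because real checks would mostly wait on network calls to an LLM or an API, so the total time approaches that of the slowest check rather than the sum of all four.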

✅ 3. Triage Assistant: Symptom Intake → Risk Stratification → Disposition

Why chaining helps

Triage requires conditional logic:

- If red‑flag symptoms → urgent care

- If moderate risk → telehealth

- If low risk → self‑care

A single LLM call tends to blur risk categories. A branching chain enforces structure.

Branch Runnable Example

Step 1: Extract structured symptoms

Step 2: Risk stratification

Branch:

- High risk → generate urgent-care instructions

- Medium risk → generate telehealth plan

- Low risk → generate self‑care guidance

Branch chain sketch

    symptoms = extract_symptoms(input)
    risk = stratify_risk(symptoms)
    if risk == "high":
        return urgent_care_instructions(symptoms)
    elif risk == "medium":
        return telehealth_plan(symptoms)
    else:
        return self_care_plan(symptoms)

This mirrors real triage protocols (e.g., Schmitt/Thompson).
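The branch can be made runnable with a small routing table, as in the plain-Python sketch below. The symptom sets and message templates are invented placeholders, not a triage protocol, and the function bodies are assumptions. LangChain offers `RunnableBranch` for this kind of conditional routing; this sketch only mimics the control flow.

```python
# Placeholder symptom sets (assumptions, not clinical rules).
RED_FLAGS = {"chest pain", "shortness of breath"}
MODERATE = {"fever", "persistent cough"}
KNOWN_SYMPTOMS = RED_FLAGS | MODERATE | {"runny nose"}


def extract_symptoms(text: str) -> set[str]:
    """Step 1: find known symptoms (a real step would use an LLM)."""
    lowered = text.lower()
    return {s for s in KNOWN_SYMPTOMS if s in lowered}


def stratify_risk(symptoms: set[str]) -> str:
    """Step 2: assign exactly one risk label."""
    if symptoms & RED_FLAGS:
        return "high"
    if symptoms & MODERATE:
        return "medium"
    return "low"


def describe(symptoms: set[str]) -> str:
    return ", ".join(sorted(symptoms)) or "no listed symptoms"


def urgent_care_instructions(symptoms: set[str]) -> str:
    return f"Urgent care advised for: {describe(symptoms)}"


def telehealth_plan(symptoms: set[str]) -> str:
    return f"Telehealth visit suggested for: {describe(symptoms)}"


def self_care_plan(symptoms: set[str]) -> str:
    return f"Self-care guidance for: {describe(symptoms)}"


# One handler per label; an unexpected label raises KeyError.
BRANCHES = {
    "high": urgent_care_instructions,
    "medium": telehealth_plan,
    "low": self_care_plan,
}


def triage(text: str) -> tuple[str, str]:
    symptoms = extract_symptoms(text)
    risk = stratify_risk(symptoms)
    return risk, BRANCHES[risk](symptoms)


print(triage("I have a fever and a runny nose"))
```

One design choice worth noting: the table lookup raises an error on an unexpected risk label, whereas a trailing `else` would quietly route it to self-care. Which behavior is appropriate depends on the deployment.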

✅ Summary Table

| Scenario | Why a Chain Helps | Best Runnable Pattern |
| --- | --- | --- |
| Clinical note → ICD‑10 coding | Multi-step reasoning, structured outputs | Sequential |
| Medication recommendation with safety checks | Independent safety checks, guideline lookup | Parallel |
| Triage assistant | Conditional logic, different outputs based on risk | Branch |